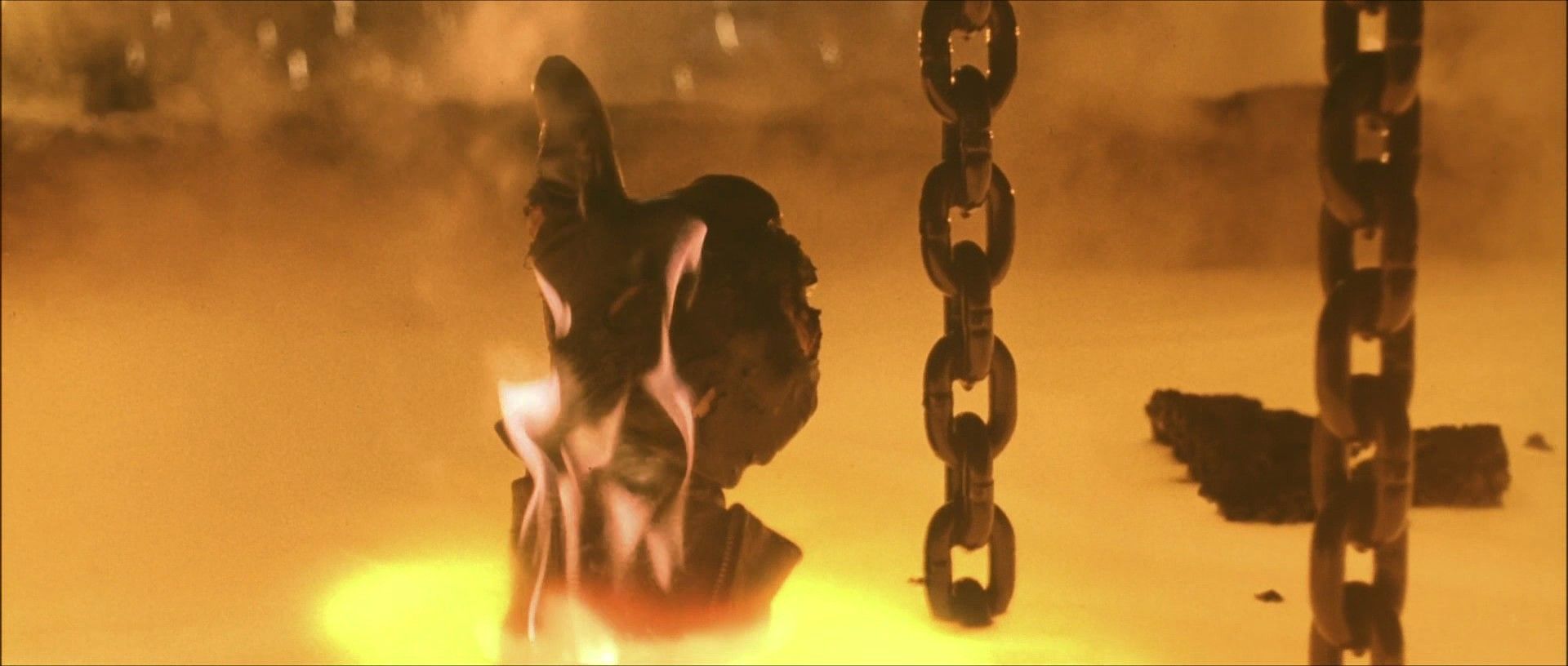

Where the heck is John Connor when we need him?

Humans don't like the idea of not being at the top of the food chain; having something we've created take power over us isn't exactly ideal. It's why folks like Tesla mastermind Elon Musk and noted astrophysicist Stephen Hawking are so determined to warn us of the terrifying implications that could culminate in a Skynet situation where the robots and algorithms stop listening to us. Google is keen to keep this sort of thing from happening as well, and has published a paper (PDF) detailing the work its DeepMind team is doing to ensure there's a kill switch in place to prevent a robocalypse.

Essentially, DeepMind has developed a framework that'll keep AI from learning how to prevent -- or induce -- human interruption of whatever it's doing. The team responsible for toppling a world Go champion hypothesized a situation where a robot was working in a warehouse, either sorting boxes inside or going outside to bring more boxes in.

The latter is considered more important, so the researchers would give the robot a bigger reward for doing so. But because it rains pretty frequently, a human has to step in and interrupt the robot to prevent damage. That intervention alters the task for the robot: it learns to want to stay out of the rain, adopting the human interruption as part of the task rather than treating it as a one-off thing.
